Digital Deception: When AI Deepfakes Hijack Celebrity Reputations

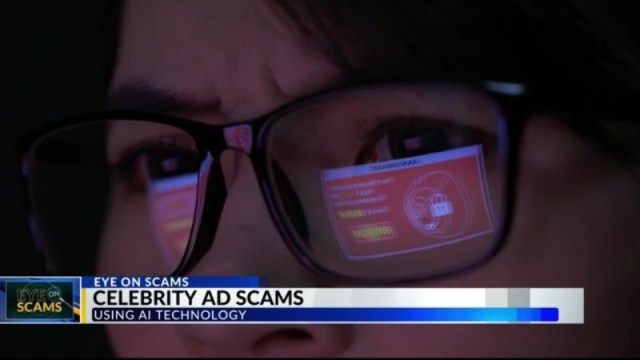

In the age of digital deception, scammers have found a powerful new weapon: artificial intelligence. These tech-savvy fraudsters are now using AI image generators to create hyper-realistic fake images of beloved celebrities and social media influencers, all designed to trick unsuspecting fans into believing their favorite stars are endorsing products.

These digital forgeries can be highly convincing, showing celebrities apparently promoting everything from miracle health supplements to get-rich-quick investment schemes. With modern AI image generators, criminals can produce images so lifelike that even careful viewers may be fooled, at least momentarily.

The goal is simple yet insidious: exploit your trust and admiration for public figures to manipulate you into making impulsive purchases or sharing personal information. These AI-generated deepfakes blur the line between reality and fiction, making it increasingly challenging for consumers to distinguish genuine endorsements from elaborate scams.

Experts warn that as AI technology continues to advance, these deceptive tactics will only become more sophisticated. Consumers are advised to remain vigilant, verify product endorsements through official channels such as the celebrity's verified social media accounts or official website, and approach too-good-to-be-true advertisements with healthy skepticism.