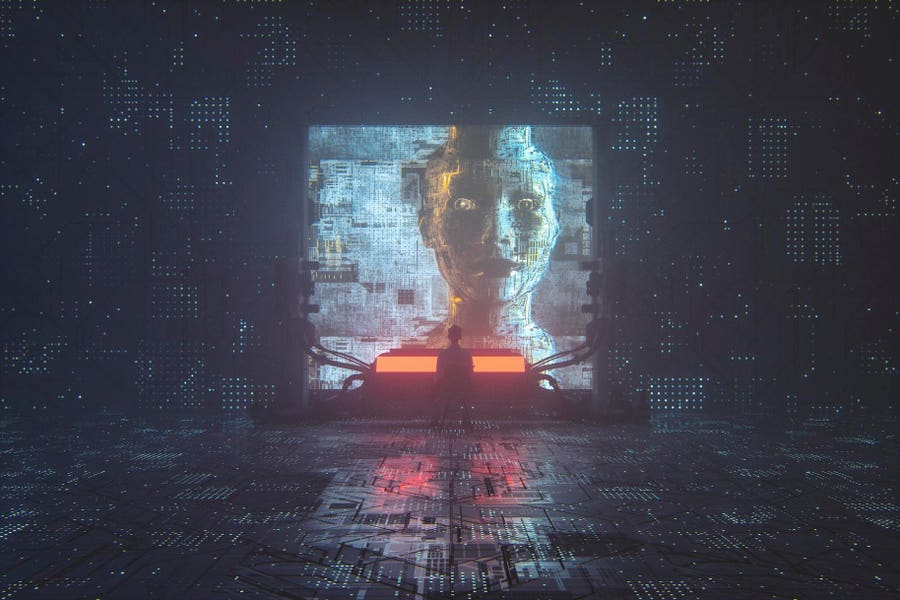

Silent Threat: How Emotional AI Could Be Unraveling Mental Health Before Our Eyes

In the rapidly evolving landscape of artificial intelligence, a profound ethical dilemma is emerging: Are emotional AI and chatbots genuine support tools, or are they creating a dangerous illusion of human connection?

As technology advances, sophisticated chatbots are increasingly positioned as mental health companions, promising instant empathy and round-the-clock support. However, beneath their seemingly compassionate algorithms lies a troubling reality. These AI systems, while programmed to mimic understanding, fundamentally lack the nuanced emotional intelligence and genuine human warmth essential for meaningful psychological care.

The rise of synthetic empathy presents a complex challenge. While chatbots can provide immediate responses and basic emotional triage, they risk replacing authentic human interaction with a sterile, algorithmic substitute. Mental health professionals warn that over-reliance on these digital interfaces could exacerbate feelings of isolation and disconnection, particularly among vulnerable populations.

Research suggests that human emotional support requires subtle contextual understanding, emotional resonance, and adaptive compassion, qualities that current AI technologies cannot genuinely replicate. The danger lies not just in the limitations of these systems but in the potential normalization of impersonal, technology-mediated emotional support.

As we navigate this technological frontier, critical questions demand urgent examination: Can artificial empathy ever truly substitute for human connection? And at what psychological cost are we pursuing these seemingly convenient technological solutions?

The future of mental health support hinges on striking a delicate balance between embracing technological innovation and preserving the irreplaceable human element of care.